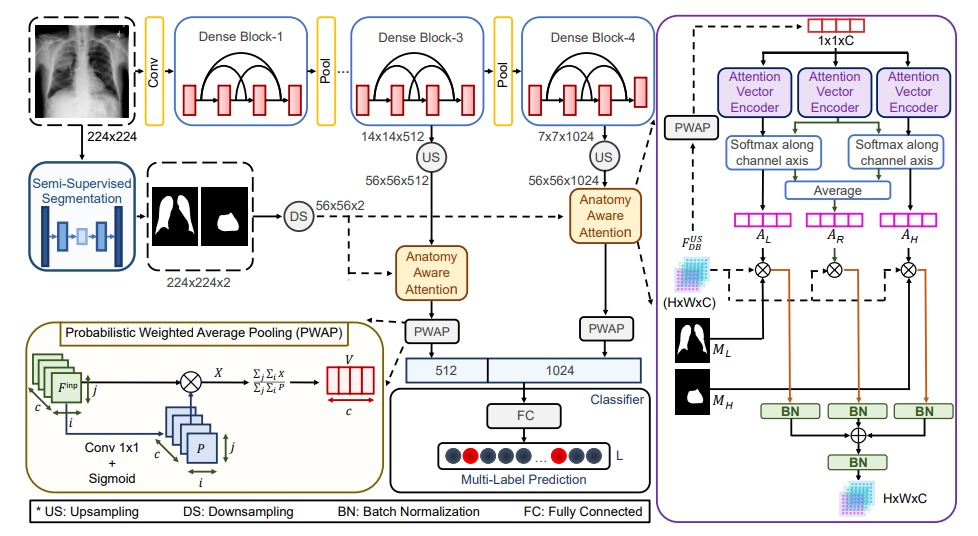

Thoracic disease detection from chest radiographs using deep learning methods has been an active area of research over the last decade. Most previous methods attempt to focus on the diseased organs in the image by identifying the spatial regions that contribute most to the model’s prediction. In contrast, expert radiologists first locate the prominent anatomical structures and then determine whether those regions are anomalous. Integrating anatomical knowledge into deep learning models could therefore bring substantial improvement in automatic disease classification. Motivated by this, we propose Anatomy-XNet, an anatomy-aware, attention-based thoracic disease classification network that prioritizes spatial features guided by pre-identified anatomy regions. We adopt a semi-supervised learning method that uses the available small-scale organ-level annotations to locate the anatomy regions in large-scale datasets where organ-level annotations are absent. The proposed Anatomy-XNet uses a pre-trained DenseNet-121 as the backbone network, together with two structured modules, Anatomy Aware Attention (A3) and Probabilistic Weighted Average Pooling (PWAP), in a cohesive framework for anatomical attention learning. We show experimentally that the proposed method sets a new state-of-the-art benchmark, achieving AUC scores of 85.78%, 92.07%, and 84.04% on three publicly available large-scale CXR datasets: NIH, Stanford CheXpert, and MIMIC-CXR, respectively. These results not only support the efficacy of using anatomy segmentation knowledge to improve thoracic disease classification but also demonstrate the generalizability of the proposed framework.
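To make the idea of anatomy-guided pooling concrete, the following is a minimal illustrative sketch in plain Python. It is not the A3 or PWAP module of Anatomy-XNet, whose exact formulations are not given in this abstract. It assumes a generic scheme in which a feature map is gated by an anatomy mask and then averaged with softmax weights over spatial locations. The function name `mask_guided_weighted_pool` and the parameter `channel_weights` (standing in for a learned scoring projection) are hypothetical.

```python
import math


def mask_guided_weighted_pool(features, anatomy_mask, channel_weights):
    """Pool a C x H x W feature map into a length-C vector.

    Assumed (hypothetical) scheme, not the paper's modules:
      1. gate every channel by an H x W anatomy mask with values in [0, 1];
      2. score each location with a linear projection over channels;
      3. turn the scores into a spatial probability map with a softmax;
      4. return the probability-weighted average of the gated features.
    """
    n_ch = len(features)
    height = len(anatomy_mask)
    width = len(anatomy_mask[0])

    # Step 1: suppress features that fall outside the anatomy region.
    gated = [[[features[c][i][j] * anatomy_mask[i][j] for j in range(width)]
              for i in range(height)] for c in range(n_ch)]

    # Step 2: one scalar score per spatial location.
    scores = [[sum(channel_weights[c] * gated[c][i][j] for c in range(n_ch))
               for j in range(width)] for i in range(height)]

    # Step 3: numerically stable softmax over all H * W locations.
    peak = max(max(row) for row in scores)
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    probs = [[e / total for e in row] for row in exps]

    # Step 4: weighted average per channel.
    pooled = [sum(gated[c][i][j] * probs[i][j]
                  for i in range(height) for j in range(width))
              for c in range(n_ch)]
    return pooled, probs


# Toy input: 2 channels on a 2 x 2 grid; the mask keeps only the top row.
toy_features = [[[1.0, 2.0], [3.0, 4.0]],
                [[0.5, 0.5], [0.5, 0.5]]]
toy_mask = [[1.0, 1.0], [0.0, 0.0]]
toy_weights = [1.0, 0.0]
toy_pooled, toy_probs = mask_guided_weighted_pool(toy_features, toy_mask, toy_weights)
```

In this sketch, locations outside the mask contribute nothing to the pooled vector because their gated features are zero. In a trained network, the mask would come from an anatomy segmentation model and the scoring weights would be learned end to end.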